Understanding Video Summarization: The Foundation

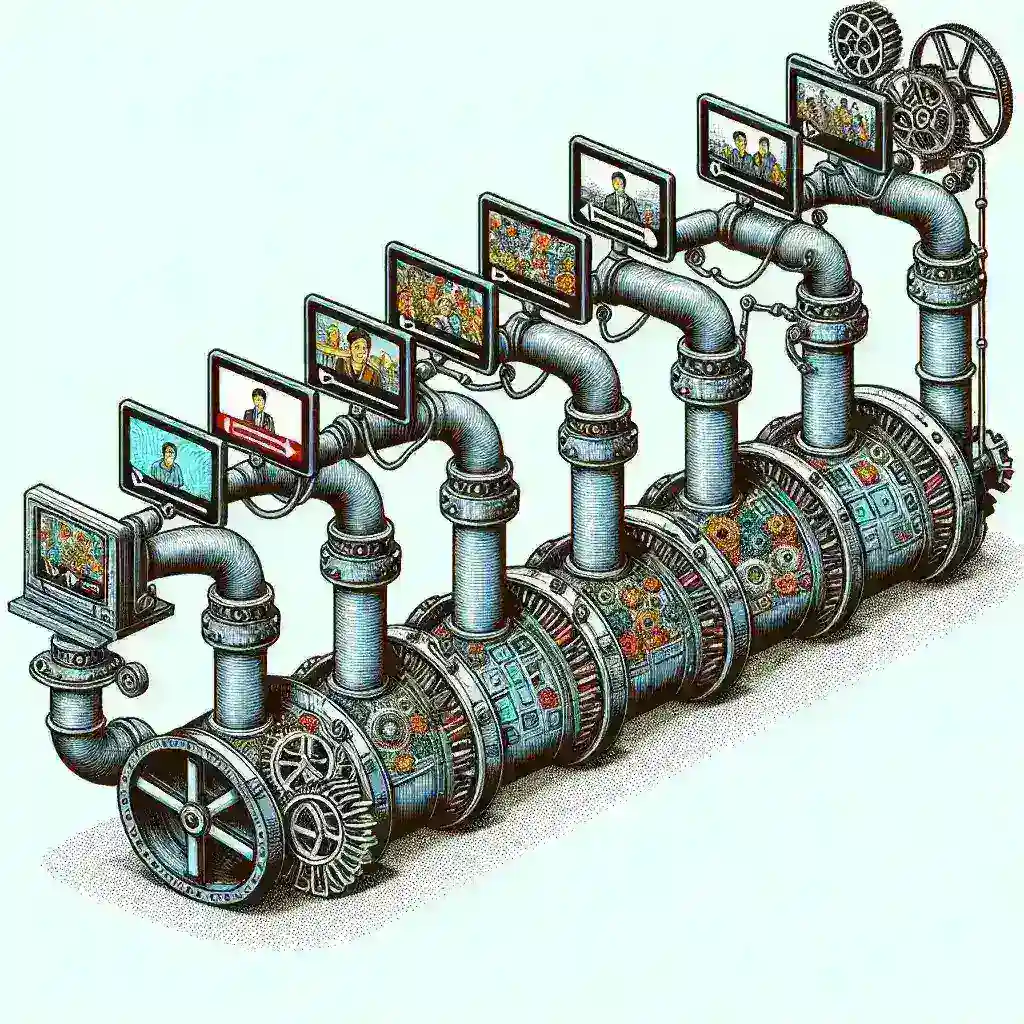

Video summarization has become an important technology as the volume of video uploaded to online platforms keeps growing. Building an effective video summarization pipeline requires understanding the fundamental components that transform lengthy video content into concise, meaningful summaries.

The process involves extracting key frames, identifying important segments, and creating coherent summaries that preserve the essential information while dramatically reducing viewing time. Modern video summarization pipelines leverage machine learning algorithms, computer vision techniques, and natural language processing to achieve these goals.

Core Components of a Video Summarization Pipeline

Video Preprocessing Module

The foundation of any robust video summarization pipeline begins with comprehensive preprocessing. This stage involves several critical steps that prepare raw video data for analysis. Frame extraction serves as the initial step, where individual frames are sampled at specific intervals to create a manageable dataset for processing.
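As a minimal sketch of interval-based frame sampling, the function below computes which frame indices to keep for a given sampling interval. The function name and parameters are illustrative assumptions, not part of any specific library; actual decoding of those frames would be handled by a tool such as OpenCV or FFmpeg.

```python
def sample_frame_indices(total_frames: int, fps: float, interval_s: float) -> list[int]:
    """Return indices of frames spaced roughly interval_s seconds apart.

    Hypothetical helper: it only does the index arithmetic and does not
    read any video data.
    """
    if total_frames <= 0 or fps <= 0 or interval_s <= 0:
        raise ValueError("total_frames, fps and interval_s must be positive")
    # Number of frames between two samples; never less than one frame.
    step = max(1, round(fps * interval_s))
    return list(range(0, total_frames, step))


if __name__ == "__main__":
    # A 4-second clip at 25 fps, sampled once per second.
    print(sample_frame_indices(total_frames=100, fps=25.0, interval_s=1.0))
```

Sampling at a fixed interval is the simplest option; a pipeline could instead sample more densely around detected scene changes.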

Video format standardization ensures compatibility across different input sources. This includes converting various video formats to a unified standard, adjusting resolution parameters, and normalizing frame rates. Audio extraction runs parallel to video processing, capturing spoken content that will later contribute to summarization decisions.
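One way to sketch the standardization and audio extraction steps is to build FFmpeg command lines from Python. The helper names and the target values (1280x720, 30 fps, 16 kHz mono audio) are assumptions chosen for illustration; the functions only construct argument lists and do not run FFmpeg.

```python
def build_normalize_cmd(src: str, dst: str, width: int = 1280,
                        height: int = 720, fps: int = 30) -> list[str]:
    """Build an FFmpeg command that rescales, re-times and re-encodes a video.

    The target resolution and frame rate are illustrative defaults.
    """
    return [
        "ffmpeg", "-y",                      # overwrite the output if it exists
        "-i", src,
        "-vf", f"scale={width}:{height}",    # resize to a fixed resolution
        "-r", str(fps),                      # normalize the output frame rate
        "-c:v", "libx264",                   # encode video as H.264
        "-an",                               # drop audio from the video file
        dst,
    ]


def build_audio_cmd(src: str, dst: str, sample_rate: int = 16000) -> list[str]:
    """Build an FFmpeg command that extracts mono audio at sample_rate Hz."""
    return [
        "ffmpeg", "-y",
        "-i", src,
        "-vn",                   # ignore the video stream
        "-ac", "1",              # downmix to a single channel
        "-ar", str(sample_rate), # resample the audio
        dst,
    ]


if __name__ == "__main__":
    print(" ".join(build_normalize_cmd("input.mov", "normalized.mp4")))
    print(" ".join(build_audio_cmd("input.mov", "audio.wav")))
```

Each list can be passed to `subprocess.run(cmd, check=True)` on a machine where FFmpeg is installed. Note that a fixed `scale` value ignores the source aspect ratio, so a real pipeline may prefer padding or aspect-preserving scaling.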

Feature Extraction Framework

Feature extraction is the intelligence layer of the pipeline, where raw visual and audio data are transformed into meaningful representations. Visual features are typically derived from object detection, scene classification, and motion analysis. They help identify significant events, scene transitions, and important visual elements within the video content.
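Scene transitions can be illustrated with a simple, classical technique: comparing intensity histograms of consecutive frames. The sketch below is not a neural feature extractor; it assumes each frame is already available as a flat list of grayscale values in the range 0 to 255, and the bin count and threshold are illustrative assumptions.

```python
def histogram(frame: list[int], bins: int = 8) -> list[float]:
    """Normalized intensity histogram of a grayscale frame (values 0..255)."""
    counts = [0] * bins
    for value in frame:
        counts[min(value * bins // 256, bins - 1)] += 1
    total = len(frame)
    return [c / total for c in counts]


def detect_cuts(frames: list[list[int]], threshold: float = 0.5,
                bins: int = 8) -> list[int]:
    """Return indices of frames whose histogram differs sharply from the previous one.

    The distance is half the L1 difference between histograms, so it lies
    in [0, 1]. The threshold is an illustrative default, not a tuned value.
    """
    if not frames:
        return []
    cuts = []
    previous = histogram(frames[0], bins)
    for index in range(1, len(frames)):
        current = histogram(frames[index], bins)
        distance = 0.5 * sum(abs(a - b) for a, b in zip(previous, current))
        if distance > threshold:
            cuts.append(index)
        previous = current
    return cuts


if __name__ == "__main__":
    dark = [10] * 16     # tiny synthetic "frames"
    bright = [240] * 16
    print(detect_cuts([dark, dark, bright, bright]))
```

Histogram comparison catches hard cuts reasonably well but tends to miss gradual transitions such as fades, which is one reason learned features are often layered on top.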

Audio feature extraction focuses on speech recognition, speaker identification, and acoustic event detection. Modern pipelines integrate advanced neural networks to extract high-level semantic features that capture contextual meaning beyond basic visual and audio characteristics.

Machine Learning Architecture Design

Deep Learning Models Integration

Contemporary video summarization pipelines rely heavily on deep learning architectures, particularly convolutional neural networks (CNNs) for visual analysis and recurrent neural networks (RNNs) for temporal modeling. Transformer-based models have also become widely used, because their attention mechanisms can relate distant moments in a video sequence directly and highlight the crucial ones.

The integration process requires careful consideration of model selection based on your specific use case. Educational content summarization demands different approaches compared to entertainment or news video processing. Custom model training often becomes necessary to achieve optimal performance for domain-specific applications.

Attention Mechanisms and Temporal Analysis

Attention mechanisms serve as the decision-making core of modern summarization systems. These mechanisms learn to focus on important segments while ignoring redundant or irrelevant content. Temporal analysis ensures that the final summary maintains logical flow and chronological coherence.

Self-attention layers help the model understand relationships between different parts of the video, enabling more sophisticated summarization strategies. Multi-head attention allows the system to focus on various aspects simultaneously, such as visual content, audio cues, and textual information when available.
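To make the idea of self-attention concrete, here is a minimal single-head sketch of scaled dot-product attention over per-segment feature vectors. It is a simplification under stated assumptions: queries, keys, and values are the raw features themselves (no learned projections), there is only one head, and the function names are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features: list[list[float]]):
    """Scaled dot-product self-attention with Q = K = V = features.

    Returns (outputs, weights), where weights[i][j] is how much segment i
    attends to segment j. A trained model would first apply learned
    projection matrices, which are omitted here.
    """
    dim = len(features[0])
    scale = math.sqrt(dim)
    weights = []
    for query in features:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in features]
        weights.append(softmax(scores))
    outputs = [
        [sum(row[j] * features[j][c] for j in range(len(features)))
         for c in range(dim)]
        for row in weights
    ]
    return outputs, weights


if __name__ == "__main__":
    segments = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    _, attn = self_attention(segments)
    for row in attn:
        print([round(w, 3) for w in row])
```

Multi-head attention repeats this computation with several independent sets of projections and concatenates the results, which is what lets different heads specialize in visual, audio, or textual cues.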

Technical Implementation Strategy

Infrastructure Requirements

Building a production-ready video summarization pipeline demands robust infrastructure capable of handling large-scale video processing. GPU acceleration becomes essential for real-time or near-real-time processing, particularly when dealing with high-resolution video content or complex deep learning models.

Storage architecture must accommodate both raw video files and processed intermediate results. Cloud-based solutions offer scalability advantages, allowing dynamic resource allocation based on processing demands. Consider implementing distributed processing frameworks to handle multiple videos simultaneously.

Software Architecture and Tools

The software stack typically includes several specialized libraries and frameworks. OpenCV provides comprehensive computer vision functionality, while TensorFlow or PyTorch serves as the machine learning backbone. FFmpeg handles video decoding and transcoding, and specialized libraries like librosa support audio analysis.

Containerization using Docker ensures consistent deployment across different environments. Orchestration tools like Kubernetes enable scalable deployment in production environments. API development frameworks facilitate integration with existing systems and user interfaces.

Pipeline Optimization Techniques

Performance Enhancement Strategies

Optimizing video summarization pipelines involves multiple approaches targeting different bottlenecks. Parallel processing allows simultaneous analysis of multiple video segments, significantly reducing total processing time. Batch processing strategies optimize resource utilization by grouping similar operations.
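The following sketch shows one way to analyze video segments in parallel with Python's standard `concurrent.futures` module. The segment length, worker count, and the placeholder `score_segment` function are assumptions for illustration; a real scorer would run feature extraction or model inference on the frames in each segment.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(total_frames: int, segment_len: int) -> list[tuple[int, int]]:
    """Split a frame range into consecutive (start, end) segments, end exclusive."""
    return [(start, min(start + segment_len, total_frames))
            for start in range(0, total_frames, segment_len)]


def score_segment(bounds: tuple[int, int]) -> float:
    """Placeholder scorer (hypothetical): returns the segment length in frames.

    In a real pipeline this is where decoding and model inference would run.
    """
    start, end = bounds
    return float(end - start)


def score_all(total_frames: int, segment_len: int, max_workers: int = 4):
    """Score every segment concurrently and return (segment, score) pairs in order."""
    segments = split_into_segments(total_frames, segment_len)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order even if tasks finish out of order.
        scores = list(pool.map(score_segment, segments))
    return list(zip(segments, scores))


if __name__ == "__main__":
    for segment, score in score_all(total_frames=10, segment_len=4):
        print(segment, score)
```

Threads suit work that is I/O-bound or that spends its time in native code releasing the interpreter lock. For CPU-bound pure-Python scoring, `ProcessPoolExecutor` offers the same interface with separate processes.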

Model optimization techniques include quantization, pruning, and knowledge distillation. These approaches reduce computational requirements, usually at a small cost in summarization quality that should be measured before deployment. Edge computing deployment enables local processing, reducing bandwidth requirements and improving response times.
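As an illustration of quantization, the sketch below applies symmetric 8-bit quantization to a list of weights and then reconstructs them. It is a toy version under simple assumptions (one scale for the whole list, plain Python floats); frameworks such as PyTorch and TensorFlow ship their own quantization tooling with more elaborate schemes.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns the quantized values and the scale needed to reconstruct them.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print(q, f"scale={scale:.6f}", f"max error={worst:.6f}")
```

The reconstruction error per weight is bounded by half the scale, which is why layers with a few very large weights lose more precision under a single shared scale.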

Quality Assurance and Evaluation

Implementing robust evaluation metrics ensures consistent summarization quality. Objective metrics include coverage, redundancy measures, and temporal coherence scores. Subjective evaluation involves human assessors rating summary quality, informativeness, and coherence.
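The objective metrics can be sketched in a few lines. The definitions below are assumptions made for illustration: coverage is the fraction of reference (human-selected) frames that the summary includes, and redundancy is the mean pairwise cosine similarity between feature vectors of the selected segments. Benchmarks and papers define these quantities in varying ways.

```python
import math


def overlap_scores(selected: list[int], reference: list[int]) -> dict[str, float]:
    """Precision, coverage (recall) and F1 between selected and reference frames."""
    sel, ref = set(selected), set(reference)
    hits = len(sel & ref)
    precision = hits / len(sel) if sel else 0.0
    coverage = hits / len(ref) if ref else 0.0
    f1 = 2 * precision * coverage / (precision + coverage) if hits else 0.0
    return {"precision": precision, "coverage": coverage, "f1": f1}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def redundancy(vectors: list[list[float]]) -> float:
    """Mean pairwise cosine similarity of selected segment features.

    Returns 0.0 when fewer than two segments are selected.
    """
    pairs = [(i, j) for i in range(len(vectors)) for j in range(i + 1, len(vectors))]
    if not pairs:
        return 0.0
    return sum(cosine(vectors[i], vectors[j]) for i, j in pairs) / len(pairs)


if __name__ == "__main__":
    print(overlap_scores(selected=[1, 2, 3, 4], reference=[3, 4, 5, 6, 7, 8]))
    print(redundancy([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]))
```

A good summary in this framing scores high on coverage and low on redundancy; tracking both guards against a system that simply selects many near-identical frames.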

A/B testing frameworks enable continuous improvement by comparing different summarization approaches. User feedback integration creates feedback loops that improve system performance over time. Automated quality monitoring alerts operators to potential issues before they affect end users.

Advanced Features and Customization

Multi-Modal Integration

Advanced video summarization pipelines integrate multiple information sources for enhanced accuracy. Text overlay recognition extracts on-screen text that provides additional context for summarization decisions. Subtitle integration incorporates spoken content analysis, particularly valuable for educational and presentation videos.

Metadata utilization includes video descriptions, tags, and timestamps that provide external context. Social media signals, when available, offer insights into content popularity and relevance. Cross-modal attention mechanisms learn to weight different information sources appropriately.
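A simple stand-in for weighting information sources is late fusion: each modality produces a per-segment importance score, and the scores are combined with per-modality weights. In the sketch below the weights are fixed by hand, which is an assumption for illustration; a cross-modal attention mechanism would learn them and could vary them per segment.

```python
def fuse_scores(modality_scores: dict[str, list[float]],
                weights: dict[str, float]) -> list[float]:
    """Weighted average of per-segment scores across modalities.

    modality_scores maps a modality name to one score per segment.
    weights maps the same names to non-negative weights (hand-set here).
    """
    lengths = {len(scores) for scores in modality_scores.values()}
    if len(lengths) != 1:
        raise ValueError("all modalities must score the same number of segments")
    total_weight = sum(weights[name] for name in modality_scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    count = lengths.pop()
    return [
        sum(weights[name] * scores[i] for name, scores in modality_scores.items())
        / total_weight
        for i in range(count)
    ]


if __name__ == "__main__":
    scores = {
        "visual": [0.9, 0.2, 0.4],
        "audio": [0.1, 0.8, 0.4],
        "ocr_text": [0.0, 0.0, 1.0],
    }
    print(fuse_scores(scores, {"visual": 2.0, "audio": 1.0, "ocr_text": 1.0}))
```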

Domain-Specific Adaptations

Different video domains require specialized approaches for optimal results. Sports video summarization focuses on key plays, goals, and highlights. News video processing emphasizes breaking news segments and important announcements. Educational content summarization identifies key concepts and explanations.

Custom training datasets become crucial for domain adaptation. Transfer learning techniques allow leveraging pre-trained models while fine-tuning for specific domains. Active learning approaches reduce annotation requirements by intelligently selecting training examples.
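Active learning can be sketched with uncertainty sampling, one common selection strategy: the clips the current model is least sure about are sent to annotators first. The example assumes a binary "important or not" classifier that outputs a probability per clip; the clip identifiers and function names are hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy in bits of a Bernoulli distribution with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def select_for_annotation(predictions: dict[str, float], k: int) -> list[str]:
    """Return the k clip ids with the most uncertain predictions.

    predictions maps a clip id to the model's probability that the clip
    belongs in a summary. Highest entropy means closest to 0.5.
    """
    ranked = sorted(predictions,
                    key=lambda clip: binary_entropy(predictions[clip]),
                    reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    model_output = {"clip_a": 0.95, "clip_b": 0.52, "clip_c": 0.10, "clip_d": 0.45}
    print(select_for_annotation(model_output, k=2))
```

Clips the model already classifies confidently add little new information when labeled, so spending the annotation budget on uncertain clips tends to improve the model faster.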

Deployment and Scaling Considerations

Production Environment Setup

Production deployment requires careful consideration of reliability, scalability, and maintenance requirements. Load balancing distributes processing requests across multiple servers, ensuring consistent performance under varying loads. Monitoring systems track processing times, error rates, and resource utilization.

Backup and recovery procedures protect against data loss and system failures. Version control systems manage model updates and configuration changes. Automated testing pipelines validate system functionality before deploying updates to production environments.

Cost Optimization and Resource Management

Effective resource management balances processing quality with operational costs. Auto-scaling policies adjust computational resources based on demand patterns. Spot (preemptible) instances in cloud environments reduce costs for non-critical processing tasks that can tolerate interruption, such as batch reprocessing.
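An auto-scaling policy can be as simple as sizing the worker pool so the current backlog drains within a target time. The sketch below is one such rule; the parameter names, the throughput figure, and the minimum and maximum bounds are all illustrative assumptions rather than settings of any particular cloud provider.

```python
import math


def desired_workers(queue_depth: int, videos_per_worker_per_min: float,
                    target_drain_minutes: float,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Workers needed to clear queue_depth videos within target_drain_minutes.

    The result is clamped to [min_workers, max_workers] to cap both cost
    and cold-start risk.
    """
    if videos_per_worker_per_min <= 0 or target_drain_minutes <= 0:
        raise ValueError("throughput and target time must be positive")
    capacity_per_worker = videos_per_worker_per_min * target_drain_minutes
    needed = math.ceil(queue_depth / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    for backlog in (0, 120, 10_000):
        print(backlog, desired_workers(backlog, videos_per_worker_per_min=2.0,
                                       target_drain_minutes=10.0))
```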

Caching strategies store frequently accessed results, reducing redundant processing. Content delivery networks distribute processed summaries globally, improving access times for end users. Regular performance audits identify optimization opportunities and cost-saving measures.
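One way to avoid redundant processing is to key cached summaries on a hash of the video content together with the summarization parameters. The sketch below keeps results in an in-memory dictionary; the class and function names are hypothetical, and a production system would more likely back this with a shared store.

```python
import hashlib
import json


def cache_key(video_bytes: bytes, params: dict) -> str:
    """Stable key derived from the video content and summarization settings."""
    digest = hashlib.sha256()
    digest.update(video_bytes)
    # sort_keys makes the key independent of dict insertion order.
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


class SummaryCache:
    """In-memory cache of summaries (illustrative, not thread-safe)."""

    def __init__(self):
        self._store = {}
        self.misses = 0

    def get_or_compute(self, video_bytes: bytes, params: dict, compute):
        """Return the cached summary, calling compute only on a miss."""
        key = cache_key(video_bytes, params)
        if key not in self._store:
            self.misses += 1
            self._store[key] = compute(video_bytes, params)
        return self._store[key]


if __name__ == "__main__":
    cache = SummaryCache()

    def fake_summarize(data, params):
        return f"summary of {len(data)} bytes at ratio {params['ratio']}"

    video = b"\x00\x01\x02\x03"
    print(cache.get_or_compute(video, {"ratio": 0.1}, fake_summarize))
    print(cache.get_or_compute(video, {"ratio": 0.1}, fake_summarize))
    print("misses:", cache.misses)
```

Including the parameters in the key matters: the same video summarized at a different target length is a different result and must not be served from the same cache entry.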

Future Trends and Emerging Technologies

The video summarization landscape continues evolving with emerging technologies and methodologies. Multimodal large language models promise more sophisticated understanding of video content by combining visual, audio, and textual information in unified frameworks.

Real-time summarization capabilities enable live video processing for streaming applications. Edge AI deployment brings summarization capabilities closer to content sources, reducing latency and bandwidth requirements. Federated learning approaches enable collaborative model improvement while preserving privacy.

Interactive summarization systems allow users to customize summary length, focus areas, and presentation styles. Explainable AI features help users understand why specific segments were selected for inclusion in summaries. Integration with augmented reality and virtual reality platforms opens new application possibilities.
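A user-chosen summary length can be treated as a time budget for segment selection. The sketch below uses a greedy heuristic, assuming each segment already has an importance score: it picks segments by score per second until the budget is used, then restores chronological order. The tuple layout and function name are assumptions, and greedy selection is not guaranteed to find the best possible combination.

```python
def select_segments(segments: list[tuple[float, float, float]],
                    budget_s: float) -> list[tuple[float, float, float]]:
    """Pick segments that fit within budget_s seconds, favoring high score density.

    Each segment is (start_s, end_s, score). The result is sorted by start
    time so the summary plays in the original order.
    """
    valid = [seg for seg in segments if seg[1] > seg[0]]
    ranked = sorted(valid, key=lambda seg: seg[2] / (seg[1] - seg[0]), reverse=True)
    chosen, used = [], 0.0
    for seg in ranked:
        duration = seg[1] - seg[0]
        if used + duration <= budget_s:
            chosen.append(seg)
            used += duration
    return sorted(chosen, key=lambda seg: seg[0])


if __name__ == "__main__":
    scored = [(0, 10, 2.0), (10, 15, 4.0), (15, 35, 5.0), (35, 40, 3.0)]
    for length in (12, 30):
        print(length, select_segments(scored, budget_s=length))
```

Because the budget is just a parameter, the same scored segments can serve a short preview and a longer digest without re-running the model.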

Conclusion

Building a comprehensive video summarization pipeline requires careful planning, technical expertise, and ongoing optimization. Success depends on understanding your specific use case requirements, selecting appropriate technologies, and implementing robust evaluation frameworks. The investment in developing these systems pays dividends through improved content accessibility, reduced viewing times, and enhanced user experiences across various applications and industries.